What is Augmented Reality?

Augmented reality (AR) is a live direct or indirect view of a physical, real-world environment whose elements are “augmented” by computer-generated or extracted real-world sensory input such as sound, video, graphics or GPS data. It is related to a more general concept called computer-mediated reality, in which a view of reality is modified (possibly even diminished rather than augmented) by a computer. Augmented reality enhances one’s current perception of reality, whereas virtual reality replaces the real world with a simulated one. Augmentation techniques are typically performed in real time and in semantic context with environmental elements, such as overlaying supplemental information like scores over a live video feed of a sporting event.
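At the pixel level, overlaying information such as a score on a live video feed is commonly done with alpha compositing: each output pixel is a weighted mix of the camera pixel and the overlay pixel. The following is a minimal sketch that assumes RGB pixels stored as tuples of 0-255 integers and a Boolean mask marking where the overlay (for example, a score box) should appear. `composite` and `superimpose` are hypothetical helper names. An opacity of 1 fully covers the video, while values between 0 and 1 let the video show through.

```python
def composite(original, overlay, alpha):
    """Blend an overlay RGB pixel over an original video pixel.

    alpha = 1.0 fully replaces the original pixel; 0 < alpha < 1 mixes the
    two; alpha = 0.0 leaves the original pixel untouched.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be between 0 and 1")
    return tuple(round(alpha * v + (1.0 - alpha) * o) for o, v in zip(original, overlay))


def superimpose(frame, overlay, mask, alpha=1.0):
    """Apply the overlay only where mask is True (hypothetical overlay region)."""
    return [
        [composite(px, ov, alpha) if m else px for px, ov, m in zip(frow, orow, mrow)]
        for frow, orow, mrow in zip(frame, overlay, mask)
    ]
```

For example, `superimpose(frame, score_box, mask, alpha=0.7)` would draw a slightly translucent score box while leaving every pixel outside the mask exactly as the camera captured it.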

With the help of advanced AR technology (e.g. adding computer vision and object recognition), the information about the user’s surrounding real world becomes interactive and digitally manipulable. Information about the environment and its objects is overlaid on the real world. This information can be virtual or real, e.g. other real sensed or measured information, such as electromagnetic radio waves, overlaid in exact alignment with where it actually is in space. Augmented reality brings components of the digital world into a person’s perceived real world. One example is an AR helmet for construction workers that displays information about construction sites. The first functional AR systems that provided immersive mixed reality experiences for users were invented in the early 1990s, starting with the Virtual Fixtures system developed at the U.S. Air Force’s Armstrong Labs in 1992. Augmented reality is also transforming education, where content may be accessed by scanning or viewing an image with a mobile device.

Technology

1. Hardware

Hardware components for augmented reality are a processor, a display, sensors and input devices. Modern mobile computing devices such as smartphones and tablet computers contain these elements, which often include a camera, MEMS sensors such as an accelerometer and a solid-state compass, and a GPS receiver, making them suitable AR platforms.

2. Display

Various technologies are used in augmented reality rendering, including optical projection systems, monitors, handheld devices, and display systems worn on the human body. A head-mounted display (HMD) is a display device worn on the head, for example mounted on a harness or helmet. HMDs place images of both the physical world and virtual objects over the user’s field of view. Modern HMDs often employ sensors for six-degrees-of-freedom tracking that allow the system to align virtual information to the physical world and adjust it in step with the user’s head movements. HMDs can provide VR users with mobile and collaborative experiences. Some providers, such as uSens and Gestigon, are even including gesture controls for full virtual immersion.
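Keeping virtual information aligned as the head turns amounts to re-projecting each anchored point with the latest tracked pose. The following is a minimal sketch that assumes an ideal pinhole camera with no lens distortion, a pose reduced to a yaw rotation plus a position (a real tracker reports all six degrees of freedom), and made-up intrinsics `fx`, `fy`, `cx`, `cy`. `project_point` is a hypothetical name, not part of any particular AR SDK.

```python
import math


def project_point(world_pt, cam_pos, yaw_deg, fx, fy, cx, cy):
    """Project a 3D world point into pixel coordinates with a pinhole model.

    Assumed convention for this sketch: x right, y down, z forward; a
    positive yaw_deg turns the camera (head) toward +x.
    Returns (u, v) in pixels, or None if the point is behind the camera.
    """
    # Express the point relative to the camera position.
    dx = world_pt[0] - cam_pos[0]
    dy = world_pt[1] - cam_pos[1]
    dz = world_pt[2] - cam_pos[2]

    # Rotate into camera axes: dot products with the camera's right and forward vectors.
    a = math.radians(yaw_deg)
    xc = math.cos(a) * dx - math.sin(a) * dz
    zc = math.sin(a) * dx + math.cos(a) * dz
    yc = dy

    if zc <= 0:
        return None  # behind the viewer, so nothing should be drawn
    # Perspective division, then map to pixels with the intrinsics.
    return (fx * xc / zc + cx, fy * yc / zc + cy)
```

When the tracked yaw changes, calling the function again with the new angle shifts the pixel position, which is what keeps a virtual label "pinned" to a physical spot.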

3. Eyeglasses

AR displays can be rendered on devices resembling eyeglasses. Versions include eyewear that uses cameras to capture the real-world view and re-display an augmented version of it through the eyepieces, and devices in which the AR imagery is projected through or reflected off the surfaces of the lenses.

4. HUD

A head-up display, also known as a HUD, is a transparent display that presents data without requiring users to look away from their usual viewpoints. A precursor technology to augmented reality, head-up displays were first developed for pilots in the 1950s, projecting simple flight data into their line of sight and thereby enabling them to keep their “heads up” rather than look down at the instruments. Near-eye augmented reality devices can be used as portable head-up displays, as they can show data, information, and images while the user views the real world. Many definitions of augmented reality only define it as overlaying information. This is basically what a head-up display does; in practice, however, augmented reality is expected to include registration and tracking between the superimposed perceptions, sensations, information, data, and images and some portion of the real world. CrowdOptic, an existing app for smartphones, applies algorithms and triangulation techniques to photo metadata, including GPS position, compass heading, and a time stamp, to arrive at a relative significance value for photo objects. CrowdOptic technology can be used by Google Glass users to learn where to look at a given point in time.
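The basic triangulation step, working out where two people are pointing from their positions and compass headings, can be sketched in a few lines. This is not CrowdOptic's algorithm, which the text above does not describe; it is an illustration that assumes the two GPS fixes have already been converted to local east/north coordinates in meters (a flat-ground approximation) and that headings are degrees clockwise from north. `triangulate` is a hypothetical name.

```python
import math


def triangulate(p1, heading1_deg, p2, heading2_deg):
    """Intersect two compass-bearing rays on a flat local east/north plane.

    p1, p2: (east, north) observer positions in meters (assumed pre-projected).
    heading*_deg: compass headings, degrees clockwise from north.
    Returns the (east, north) point both observers face, or None if the rays
    are parallel or only cross behind one of the observers.
    """
    # Compass heading -> unit direction vector (east = +x, north = +y).
    d1 = (math.sin(math.radians(heading1_deg)), math.cos(math.radians(heading1_deg)))
    d2 = (math.sin(math.radians(heading2_deg)), math.cos(math.radians(heading2_deg)))

    # Solve p1 + t1*d1 = p2 + t2*d2 for t1 and t2 with Cramer's rule.
    denom = d1[0] * (-d2[1]) - d1[1] * (-d2[0])
    if abs(denom) < 1e-12:
        return None  # parallel bearings never meet
    bx, by = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (bx * (-d2[1]) - by * (-d2[0])) / denom
    t2 = (d1[0] * by - d1[1] * bx) / denom
    if t1 < 0 or t2 < 0:
        return None  # the lines cross behind an observer, not in front
    return (p1[0] + t1 * d1[0], p1[1] + t1 * d1[1])
```

With many photos instead of two, a system could intersect every pair of bearings and look for clusters of intersections; how CrowdOptic turns such data into its "significance value" is not covered here.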

5. Contact lenses

Contact lenses that display AR imaging are in development. These bionic contact lenses might contain the elements for display embedded into the lens, including integrated circuitry, LEDs and an antenna for wireless communication. The first contact lens display was reported in 1999, with further reports 11 years later, in 2010/2011. Another version of contact lenses, in development for the U.S. military, is designed to function with AR spectacles, allowing soldiers to focus on close-to-the-eye AR images on the spectacles and distant real-world objects at the same time. The futuristic short film Sight features contact-lens-like augmented reality devices.

6. Spatial

Spatial Augmented Reality (SAR) augments real-world objects and scenes without the use of special displays such as monitors, head-mounted displays or handheld devices. SAR makes use of digital projectors to display graphical information onto physical objects. The key difference in SAR is that the display is separated from the users of the system. Because the displays are not associated with each user, SAR scales naturally up to groups of users, thus allowing for collocated collaboration between users. Examples include shader lamps, mobile projectors, virtual tables, and smart projectors. Shader lamps mimic and augment reality by projecting imagery onto neutral objects, providing the opportunity to enhance an object’s appearance with the materials of a simple unit: a projector, a camera, and a sensor.

Other applications include table and wall projections. One innovation, the Extended Virtual Table, separates the virtual from the real by including beam-splitter mirrors attached to the ceiling at an adjustable angle. Virtual showcases, which employ beam-splitter mirrors together with multiple graphics displays, provide an interactive means of simultaneously engaging with the virtual and the real. Many more implementations and configurations make spatial augmented reality display an increasingly attractive interactive alternative. An SAR system can display on any number of surfaces of an indoor setting at once. SAR supports both a graphical visualization and passive haptic sensation for the end users. Users are able to touch physical objects in a process that provides passive haptic sensation.

7. Software and algorithms

A key measure of AR systems is how realistically they integrate augmentations with the real world. The software must derive real-world coordinates, independent of the camera, from camera images. That process is called image registration, and it uses different methods of computer vision, mostly related to video tracking. Many computer vision methods of augmented reality are inherited from visual odometry.

Usually those methods consist of two stages. The first stage is to detect interest points, fiducial markers or optical flow in the camera images. This step can use feature detection methods like corner detection, blob detection, edge detection or thresholding, and/or other image processing methods. The second stage restores a real-world coordinate system from the data obtained in the first stage. Some methods assume objects with known geometry (or fiducial markers) are present in the scene. In some of those cases the scene’s 3D structure should be calculated beforehand. If part of the scene is unknown, simultaneous localization and mapping (SLAM) can map relative positions. If no information about scene geometry is available, structure from motion methods like bundle adjustment are used. Mathematical methods used in the second stage include projective (epipolar) geometry, geometric algebra, rotation representation with the exponential map, Kalman and particle filters, nonlinear optimization, and robust statistics.
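For the fiducial-marker case, once the first stage has located a square marker's four corners, a coarse image-space pose follows from simple geometry. The sketch below assumes the corners are already detected and ordered top-left, top-right, bottom-right, bottom-left; recovering the full 3D pose (for example via a homography or a perspective-n-point solver) is a further step that is omitted here. `marker_pose_2d` is a hypothetical helper.

```python
import math


def marker_pose_2d(corners):
    """Compute a square marker's image-space centre, in-plane rotation and size.

    corners: four (x, y) pixel points, assumed ordered top-left, top-right,
             bottom-right, bottom-left.
    Returns (centre_x, centre_y, angle_deg, side_px), where angle_deg is the
    rotation of the marker's top edge relative to the image x-axis.
    """
    if len(corners) != 4:
        raise ValueError("expected exactly four corners")
    cx = sum(p[0] for p in corners) / 4.0
    cy = sum(p[1] for p in corners) / 4.0

    # The top edge (top-left -> top-right) gives the in-plane rotation.
    (x0, y0), (x1, y1) = corners[0], corners[1]
    angle = math.degrees(math.atan2(y1 - y0, x1 - x0))

    # Mean edge length: a larger value means the marker appears closer.
    side = sum(math.dist(corners[i], corners[(i + 1) % 4]) for i in range(4)) / 4.0
    return cx, cy, angle, side
```

An overlay could then be drawn centred on `(cx, cy)`, rotated by `angle_deg` and scaled in proportion to `side_px`, which already gives a convincing "sticker" effect for a marker facing the camera.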

Application

Augmented reality has many applications. First used for military, industrial, and medical applications, by 2012 its use expanded into entertainment and other commercial industries. By 2016, powerful mobile devices allowed AR to become a useful learning aid even in primary schools.

1. Literature

The first description of AR as we know it today was in Virtual Light, the 1994 novel by William Gibson. In 2011, AR was blended with poetry by ni ka from Sekai Camera in Tokyo, Japan. The text of these AR poems comes from Paul Celan’s “Die Niemandsrose”, expressing mourning for “3.11”, the March 2011 Tōhoku earthquake and tsunami.

2. Archaeology

AR was applied to aid archaeological research. By augmenting archaeological features onto the modern landscape, AR allowed archaeologists to formulate possible site configurations from extant structures. Computer generated models of ruins, buildings, landscapes or even ancient people have been recycled into early archaeological AR applications.

3. Architecture

AR can aid in visualizing building projects. Computer-generated images of a structure can be superimposed onto a real-life local view of a property before the physical building is constructed there; this was demonstrated publicly by Trimble Navigation in 2004. AR can also be employed within an architect’s workspace, rendering animated 3D visualizations of their 2D drawings into their view. Architectural sightseeing can be enhanced with AR applications that allow users viewing a building’s exterior to virtually see through its walls, viewing its interior objects and layout.

With the continual improvements to GPS accuracy, businesses are able to use augmented reality to visualize georeferenced models of construction sites, underground structures, cables and pipes using mobile devices. Augmented reality is applied to present new projects, to solve on-site construction challenges, and to enhance promotional materials. Examples include the Daqri Smart Helmet, an Android-powered hard hat used to create augmented reality for the industrial worker, including visual instructions, real time alerts, and 3D mapping.

4. Visual art

AR applied in the visual arts allows objects or places to trigger artistic multidimensional experiences and interpretations of reality. AR technology aided the development of eye tracking technology to translate a disabled person’s eye movements into drawings on a screen.

5. Commerce

The AR-Icon can be used as a marker on print as well as online media. It signals to the viewer that digital content is behind it. The content can be viewed with a smartphone or tablet. AR is used to integrate print and video marketing. Printed marketing material can be designed with certain “trigger” images that, when scanned by an AR-enabled device using image recognition, activate a video version of the promotional material. A major difference between Augmented Reality and straightforward image recognition is that AR can overlay multiple media in the view screen at the same time, such as social media share buttons, in-page video, and even audio and 3D objects. Traditional print-only publications are using Augmented Reality to connect many different types of media.

AR can enhance product previews such as allowing a customer to view what’s inside a product’s packaging without opening it. AR can also be used as an aid in selecting products from a catalog or through a kiosk. Scanned images of products can activate views of additional content such as customization options and additional images of the product in its use.

6. Video games

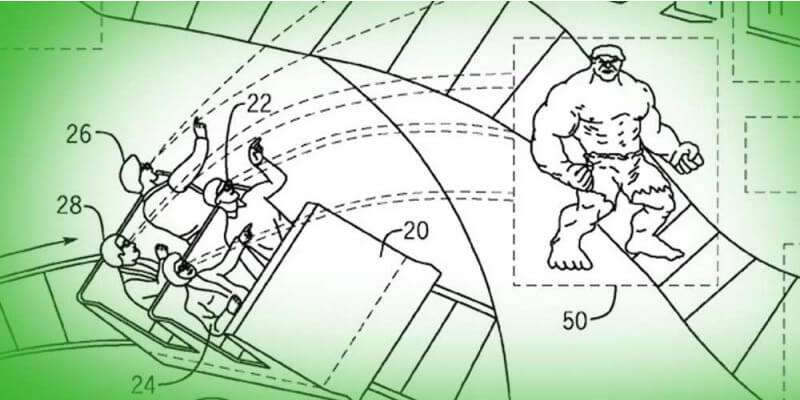

The gaming industry embraced AR technology. A number of games were developed for prepared indoor environments, such as AR air hockey, Titans of Space, collaborative combat against virtual enemies, and AR-enhanced pool table games. Augmented reality allowed video game players to experience digital game play in a real world environment. Companies and platforms like Niantic and LyteShot emerged as major augmented reality gaming creators. Niantic is notable for releasing the record-breaking Pokémon Go game.

7. Industrial design

AR allowed industrial designers to experience a product’s design and operation before completion. Volkswagen used AR for comparing calculated and actual crash test imagery. AR was used to visualize and modify car body structure and engine layout. AR was also used to compare digital mock-ups with physical mock-ups for finding discrepancies between them.

How Does Augmented Reality Work?

Augmented reality can be displayed on a wide variety of displays, from screens and monitors to handheld devices or glasses. Google Glass and other head-up displays (HUDs) put augmented reality directly onto your face, usually in the form of glasses. Handheld devices employ small displays that fit in users’ hands, including smartphones and tablets. As reality technologies continue to advance, augmented reality devices will gradually require less hardware and start being applied to things like contact lenses and virtual retinal displays.

Key components of an Augmented Reality Device

1. Sensors and Cameras:

Sensors are usually on the outside of augmented reality devices; they gather the user’s real-world interactions and pass them on to be processed and interpreted. Cameras are also located on the outside of the device, and visually scan the surrounding area to collect data about it. The device takes this information, which often determines where surrounding physical objects are located, and then formulates a digital model to determine appropriate output. In the case of Microsoft HoloLens, specific cameras perform specific duties, such as depth sensing. Depth-sensing cameras work in tandem with two “environment understanding cameras” on each side of the device. Another common type of camera is a standard multi-megapixel camera (similar to the ones used in smartphones) to record pictures, videos, and sometimes information to assist with augmentation.

2. Projection:

While “Projection Based Augmented Reality” is a category in itself, here we are specifically referring to a miniature projector often found in a forward- and outward-facing position on wearable augmented reality headsets. The projector can essentially turn any surface into an interactive environment. As mentioned above, the information taken in by the cameras used to examine the surrounding world is processed and then projected onto a surface in front of the user, which could be a wrist, a wall, or even another person. The use of projection in augmented reality devices means that screen real estate will eventually become a less important component. In the future, you may not need an iPad to play an online game of chess because you will be able to play it on the tabletop in front of you.

3. Processing:

Augmented reality devices are essentially small but powerful computers packed into wearable devices. These devices require significant computer processing power and utilize many of the same components that our smartphones do. These components include a CPU, a GPU, flash memory, RAM, a Bluetooth/Wi-Fi microchip, a global positioning system (GPS) microchip, and more. Advanced augmented reality devices, such as the Microsoft HoloLens, utilize an accelerometer (to measure how your head is accelerating, including the pull of gravity), a gyroscope (to track the tilt and orientation of your head as it rotates), and a magnetometer (to function as a compass and figure out which direction your head is pointing) to provide a truly immersive experience.
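Combining these sensors is what keeps an estimated head orientation both responsive and stable: a gyroscope tracks fast rotation well but its integrated angle drifts over time, while the accelerometer's view of gravity gives a drift-free but noisy tilt reference. A common, simple way to fuse them is a complementary filter. The sketch below is a generic illustration, not HoloLens's actual sensor fusion: it tracks a single tilt (pitch) angle, and the weight `alpha = 0.98` and the function names are assumptions.

```python
import math


def accel_pitch_deg(ax, ay, az):
    """Estimate pitch in degrees from the gravity direction seen by the accelerometer."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples, dt, alpha=0.98, initial_pitch=0.0):
    """Fuse gyroscope rate and accelerometer tilt into a pitch estimate.

    samples: iterable of (gyro_rate_dps, (ax, ay, az)) tuples, where
             gyro_rate_dps is the pitch rate in degrees per second.
    dt: sample period in seconds.
    alpha: weight given to the integrated gyroscope angle (assumed value).
    Returns a list with one fused pitch estimate per sample.
    """
    pitch = initial_pitch
    history = []
    for gyro_rate, accel in samples:
        gyro_estimate = pitch + gyro_rate * dt   # short term: integrate the rotation rate
        accel_estimate = accel_pitch_deg(*accel)  # long term: absolute gravity reference
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
        history.append(pitch)
    return history
```

With a constant gyroscope bias of 1 degree per second, pure integration would drift without limit, whereas this filter settles at a small fixed error (about 0.49 degrees for `dt = 0.01` and `alpha = 0.98`). A magnetometer can correct heading in the same way that the accelerometer corrects tilt.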

4. Reflection:

Mirrors are used in augmented reality devices to help your eyes view virtual images. Some augmented reality devices may have “an array of small curved mirrors”, and others may have a simple double-sided mirror, with one surface reflecting incoming light to a side-mounted camera and the other reflecting light from a side-mounted display to the user’s eye. A so-called light engine emits light towards two separate lenses. The light hits layers within those lenses and then enters the eye at specific angles, intensities and colors, producing a final holistic image on the eye’s retina.

How Is Augmented Reality Controlled?

Augmented reality devices are most often controlled by either a touch pad or voice commands. The touch pads are often somewhere on the device that is easily reachable. They work by sensing the pressure changes that occur when a user taps or swipes a specific spot. Voice commands work very similarly to the way they do on our smartphones. A tiny microphone on the device picks up your voice, and then a microprocessor interprets the commands. Voice commands, such as those on the Google Glass augmented reality device, are drawn from a preprogrammed list of commands that you can use. On Google Glass, nearly all of them start with “OK, Glass,” which alerts your glasses that a command is about to follow.
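Once speech recognition has turned audio into text, checking for the wake phrase and matching a preprogrammed command is simple text processing. The wake phrase "OK, Glass" comes from the paragraph above; the command list and the `parse_voice_command` function are hypothetical and do not reflect how Google Glass actually implements this.

```python
import re

WAKE_PHRASE = "ok glass"
# Hypothetical preprogrammed command list; a real device ships its own.
COMMANDS = ("take a picture", "record a video", "get directions to", "send a message to")


def normalize(text):
    """Lower-case a transcript and drop punctuation so 'OK, Glass,' becomes 'ok glass'."""
    return " ".join(re.sub(r"[^a-z0-9 ]", " ", text.lower()).split())


def parse_voice_command(transcript):
    """Return (command, argument) if the transcript starts with the wake phrase
    followed by a known command; otherwise return None."""
    text = normalize(transcript)
    if not text.startswith(WAKE_PHRASE + " "):
        return None  # no wake phrase, so the speech is ignored
    rest = text[len(WAKE_PHRASE) + 1:]
    for command in COMMANDS:
        if rest == command or rest.startswith(command + " "):
            return command, rest[len(command):].strip()
    return None  # wake phrase heard, but not a preprogrammed command
```

For example, `parse_voice_command("OK Glass, get directions to Central Park")` yields the command `"get directions to"` with the argument `"central park"`. Requiring the wake phrase first keeps ordinary conversation from triggering actions.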

Types of Augmented Reality

1. Marker based Augmented Reality: Marker based augmented reality uses a camera and some type of visual marker, such as a QR or 2D code, to produce a result only when the marker is sensed by a reader. Marker based applications use a camera on the device to distinguish a marker from any other real world object. Distinct but simple patterns (such as a QR code) are used as the markers, because they can be easily recognized and do not require a lot of processing power to read. The marker’s position and orientation are also calculated, and some type of content and/or information is then overlaid on the marker.

2. Marker-less Augmented Reality: Marker-less (also called location-based, position-based, or GPS) augmented reality, one of the most widely used applications of augmented reality, uses a GPS receiver, digital compass, velocity meter, or accelerometer embedded in the device to provide data based on your location. A strong force behind marker-less augmented reality technology is the wide availability of smartphones and the location detection features they provide. It is most commonly used for mapping directions, finding nearby businesses, and other location-centric mobile applications.

3. Projection Based Augmented Reality: Projection based augmented reality works by projecting artificial light onto real world surfaces. Projection based augmented reality applications allow for human interaction by sending light onto a real world surface and then sensing the human interaction (e.g. touch) with that projected light. Detecting the user’s interaction is done by differentiating between an expected (or known) projection and the altered projection (caused by the user’s interaction). Another interesting application of projection based augmented reality utilizes laser plasma technology to project a three-dimensional (3D) interactive hologram into mid-air.

4. Superimposition based Augmented Reality: Superimposition based augmented reality either partially or fully replaces the original view of an object with a newly augmented view of the same object. In superimposition based augmented reality, object recognition plays a vital role, because the application cannot replace the original view with an augmented one if it cannot determine what the object is. A strong consumer-facing example of superimposition based augmented reality can be found in the Ikea augmented reality furniture catalogue. By downloading an app and scanning selected pages in the printed or digital catalogue, users can place virtual furniture in their own home with the help of augmented reality.
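For projection based AR (item 3 above), the comparison between the expected projection and what the camera actually sees can be sketched as per-pixel differencing. The sketch assumes the camera image has already been aligned to the projector's pixel grid and reduced to grayscale, with both images given as lists of rows of 0-255 values. The thresholds and the `detect_touch` name are hypothetical; a real system would calibrate for lighting and surface color.

```python
def detect_touch(expected, observed, pixel_threshold=40, min_pixels=3):
    """Find where a hand or finger alters a known projected image.

    expected, observed: equal-sized 2D lists of grayscale values (0-255),
    assumed already aligned to the same pixel grid.
    Returns the (row, col) centroid of the changed pixels, or None if too
    few pixels differ to count as an interaction.
    """
    changed = [
        (r, c)
        for r, (exp_row, obs_row) in enumerate(zip(expected, observed))
        for c, (e, o) in enumerate(zip(exp_row, obs_row))
        if abs(e - o) > pixel_threshold  # pixel differs noticeably from the projection
    ]
    if len(changed) < min_pixels:
        return None  # treat a handful of differing pixels as sensor noise
    row = sum(r for r, _ in changed) / len(changed)
    col = sum(c for _, c in changed) / len(changed)
    return row, col
```

The returned centroid could then be checked against the regions of projected buttons to decide which one was "pressed".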

Augmented reality comes to mobile phones

Augmented reality, or AR, is a term that refers to technology that superimposes computer-generated content over live images viewed through cameras. The technology, which has been used in gaming and in military applications on computers, has been around for years. But thanks to more sophisticated devices, faster wireless broadband networks, and new developments at the chip level by companies like Qualcomm, it has become inexpensive enough to put into smartphones and tablets.

Even though these are still the early days for the technology (chip vendors like Qualcomm are just now giving demonstrations), augmented reality could have a major impact on smartphones in the coming years.

“The idea that a mobile device knows where I am and can access, manipulate, and overlay that information on real images that are right in front of me really gets my science fiction juices flowing,” said Mark Donovan, senior analyst at ComScore. “It’s just beginning now, and it will likely be one of the most interesting trends in mobile in the next few years.”

Just as location-based services have begun to change how wireless subscribers use their cell phones and marketers reach an increasingly mobile audience, augmented reality will go a step further, bringing a wealth of collected data to users’ fingertips.

Today, GPS and other location-based technologies allow people to track and find friends on the go. They also allow them to “check in” at particular locations. In other words, wireless subscribers provide information about their surroundings, such as where they are, and that information is stored and shared with others via the Internet cloud. That information can be used so friends can locate you, or it can be used by marketers to send you coupons and other promotions. But as these location services are married to augmented-reality technology, mobile subscribers have the opportunity to allow the crowd-sourced data about a particular location to tell them about their surroundings. Think of Flickr and Wikipedia information layered on top of real-time images you can see via your phone’s camera and displayed on your smartphone’s screen.

For example, imagine you’re on a walking tour of New York City. You could stand in front of 97 Orchard St., the location of the Lower East Side Tenement Museum, and get a virtual tour guide to tell you about the building and its history. Images of how the neighborhood looked 100 years ago could be overlaid on top of the existing image of the neighborhood on your mobile phone screen.

Facts and other information about the neighborhood and the people who lived there could be embedded onto the screen. And by clicking on an icon, you could hear audio, see video, or read text about what happened there. Other visitors could leave virtual comments about the tour. Maybe someone would leave a virtual note letting you know of a good pizza place a block away. That pizza joint might also insert an icon offering you a coupon. AR could also be very useful in education. Biology students, for example, could use an augmented-reality application and a smartphone to get additional information about what they are seeing as they dissect a frog.

Toying with AR

These are just a few examples of how AR on a handheld device could be used to enhance the user’s experience. Matt Grob, Qualcomm’s senior vice president of engineering and head of corporate research development, recently showed off the company’s augmented-reality technology at the EmTech conference at MIT in Cambridge, Mass. Grob and other Qualcomm execs have been demonstrating the new technology for the past few months at various conferences to drum up interest and excitement.

The company’s Snapdragon processors and a new software developer kit for Android smartphones will help provide the necessary foundation for building and using augmented-reality technology on cell phones. In the Qualcomm demo, the company teamed with toy maker Mattel to create a virtual update to a classic game called Rock ‘Em Sock ‘Em Robots. Using Qualcomm’s technology and the embedded smartphone camera, players could see superimposed virtual robots on their smartphone displays. The robots appeared in the ring, which was a piece of paper printed with the static image of the ring and its ropes. Players used the buttons on their handsets to throw punches, and their robots actually moved around the ring as the players physically circled the table where the image of the ring was placed.

Grob said gaming offers a huge opportunity for Qualcomm and its augmented-reality technology. But he said others will also see the usefulness of the technology over time.

“Augmented reality certainly enhances the gaming experience,” he said. “But it’s also a nice way for advertisers to improve their reach to consumers by bringing in contextual awareness between the device and the physical world.”

For example, marketers can insert animated coupons on top of real-life images of their products in stores. So when consumers walk by a box of cereal in the grocery store and look at their phone’s screen, they could get an instant rebate. While augmented-reality technology has been around for a while and is already being used in some PC games, Qualcomm has advanced the technology by making it more affordable and usable for portable devices. Grob said Qualcomm’s software developer kit for Android devices, which was announced earlier this summer, will be available in coming weeks. This will allow game developers and others to start developing applications using the augmented-reality technology. And these games and apps should be able to operate on current-generation Android smartphones. Grob said the technology is already mature enough for commercial use. The demonstration of the Rock ‘Em Sock ‘Em game used Google’s Nexus One Android handsets. Qualcomm’s software developer kit will initially be available for Android, but other mobile platforms are expected to be added later.

Zuck says augmented reality will flourish on phones before glasses

Snapchat selfie lenses and Pokemon Go, not Magic Leap and HoloLens. Smartphones are how Mark Zuckerberg sees augmented reality going mainstream, rather than potentially awkward headsets and glasses. That’s an important clue to how Facebook will adapt to this next computing platform. Facebook has watched this play out with the Oculus Rift vs the Gear VR. Despite the Rift being much more powerful, it’s tethered at home, expensive, and requires a high-grade gaming computer most people don’t own. The Oculus-made Gear VR, a cheap headset powered by popular Samsung Galaxy phones, is mobile, comparatively cheap, and runs on a device tons of people already own. That’s why the Gear VR had already reached 1 million active users while the Rift is confined to hardcore gamers and VR enthusiasts.

Snapchat’s animated selfie lenses and Pokemon Go prove that people are eager to play with AR as long as it doesn’t require special hardware. Sure, the object recognition and overlaid graphics aren’t the most technologically impressive uses of AR. But Facebook, Instagram, Messenger, and WhatsApp aren’t necessarily the most technologically impressive uses of mobile, yet they became the most popular apps. Facebook has already begun to publicly invest in AR through the acquisition of MSQRD, an app that makes animated selfie filters similar to Snapchat’s. And Zuckerberg has repeatedly said AR is a big piece of Facebook’s long-term roadmap.

Apps that augment the videos you share to Facebook may be the company’s next step. And since the Gear VR can take advantage of the rear camera on your Galaxy, it could simulate augmented reality by combining the real-world image with added effects, though this will look blurry and have more lag compared to graphics appearing on a transparent visor like HoloLens.

Augmented Reality on Cell Phones

While it may be some time before you buy a device like SixthSense, more primitive versions of augmented reality are already here on some cell phones, particularly in applications for the iPhone and phones with the Android operating system. In the Netherlands, cell phone owners can download an application called Layar that uses the phone’s camera and GPS capabilities to gather information about the surrounding area. Layar then shows information about restaurants or other sites in the area, overlaying this information on the phone’s screen. You can even point the phone at a building, and Layar will tell you if any companies in that building are hiring, or it might be able to find photos of the building on Flickr or to locate its history on Wikipedia.

Layar isn’t the only application of its type. In August 2009, some iPhone users were surprised to find an augmented-reality “easter egg” hidden within the Yelp application. Yelp is known for its user reviews of restaurants and other businesses, but its hidden augmented-reality component, called Monocle, takes things one step further. Just start up the Yelp app, shake your iPhone 3GS three times and Monocle activates. Using your phone’s GPS and compass, Monocle will display information about local restaurants, including ratings and reviews, on your cell phone screen. You can touch one of the listings to find out more about a particular restaurant.
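An app like Monocle or Layar has to decide where on screen to draw each nearby listing. The actual Yelp and Layar implementations are not described in the text; the following is a minimal sketch that assumes the phone reports its GPS position and compass heading and that the camera has a known horizontal field of view (60 degrees and a 1080-pixel-wide screen are assumed values). It computes the standard initial great-circle bearing to the point of interest and maps the angle between that bearing and the heading to a horizontal pixel position. `bearing_deg` and `screen_x` are hypothetical names.

```python
import math


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def screen_x(user_lat, user_lon, heading, poi_lat, poi_lon, screen_width=1080, fov_deg=60.0):
    """Horizontal pixel at which to draw a point of interest, or None if off-screen.

    Uses a linear angle-to-pixel mapping, which is an approximation: a true
    pinhole camera maps angles to pixels through a tangent.
    """
    # Signed angle from the camera's heading to the POI, wrapped into [-180, 180).
    offset = (bearing_deg(user_lat, user_lon, poi_lat, poi_lon) - heading + 180.0) % 360.0 - 180.0
    if abs(offset) > fov_deg / 2:
        return None  # outside the camera's field of view
    return screen_width / 2 + offset / fov_deg * screen_width
```

The wrap-around step matters: a user facing 350 degrees should still see a restaurant due north (0 degrees) slightly right of centre rather than having it rejected as 350 degrees away.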

Limitations and the Future of Augmented Reality

People may not want to rely on their cell phones, which have small screens on which to superimpose information. For that reason, wearable devices like SixthSense or augmented-reality-capable contact lenses and glasses will provide users with more convenient, expansive views of the world around them. Screen real estate will no longer be an issue. In the near future, instead of playing a real-time strategy game on your computer, you may be able to invite a friend over, put on your AR glasses, and play on the tabletop in front of you.

Patents to showcase Augmented Reality in phones

1. Apple Files a patent describing Two Viewing Modes for iPhone:

New Claim #1: A cellular telephone comprising: a speaker; a button; a camera; a connector; and a display, wherein the display is configured to operate in one of a normal viewing mode and a close up viewing mode and wherein the display is configured to operate in the close up viewing mode when the cellular telephone is connected to a head-mounted device.

Claim #1 on November 10: An apparatus operable with a removable cellular telephone comprising: a head-mounted frame that is configured to support the removable cellular telephone; and first and second lenses coupled to the head-mounted frame through which the removable cellular telephone is viewable when the removable cellular telephone is supported by the head-mounted frame. In effect all of the patent claims have been updated or added in this patent application. This time Apple places emphasis on the changing iPhone display viewing style. Below are the additional patent claims that support this new emphasis:

Claim #6: A portable electronic device comprising: a speaker; a button; a camera; a connector; and a display, wherein the display is configured to operate in one of a first display mode and a second display mode, wherein the display presents images in a first format in the first display mode, wherein the display presents images in a second format that is different from the first format in the second display mode, and wherein the display is configured to divide an image frame into a left image frame and a right image frame while operating in the second display mode.

Claim #8: The portable electronic device defined in claim 7, wherein the first display mode is a normal display mode, and wherein the second display mode is a close up display mode.

Claim #10: The portable electronic device defined in claim 9, wherein the display operates in the second display mode when it is detected that the portable electronic device is being used for close up viewing.

Claim #11: A method of operating a cellular telephone that is configured to be coupled to a head-mounted device, the method comprising: presenting images according to a first format in a normal viewing mode; detecting that the portable electronic device is coupled to the head-mounted device; and in response to detecting that the portable electronic device is coupled to the head-mounted device, presenting images according to a second format that is different than the first format.

Claim #12: The method defined in claim 11, wherein presenting images according to the first format comprises presenting images with a first resolution.

Claim #13: The method defined in claim 12, wherein presenting images according to the second format comprises presenting images with a second resolution that is different than the first resolution.

Claim #14: The method defined in claim 11, wherein presenting images according to the second format comprises receiving an image frame and dividing the image frame into a first image frame and a second image frame.

Claim #15: The method defined in claim 14, wherein presenting images according to the second format comprises presenting images according to the second format using a display in the cellular telephone.

Claim #16: The method defined in claim 15, wherein presenting images according to the second format using the display comprises directing the first image frame to a first portion of the display and directing the second image frame to a second portion of the display.

Claim #17: The method defined in claim 16, wherein the first image frame is a left image frame and wherein the second image frame is a right image frame.
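The display behaviour described in claims 6 and 11 through 17 can be sketched in a few lines. This is a hypothetical Python model, not Apple’s implementation: the `Viewport` type and the `split_frame` and `layout` functions are illustrative names, and the “second format” is assumed to be two half-width, side-by-side viewports, one per eye.

```python
from dataclasses import dataclass
from typing import List, Tuple

Image = List[List[int]]  # row-major grid of pixel values


@dataclass(frozen=True)
class Viewport:
    """A rectangular region of the phone display (hypothetical type)."""
    x: int
    y: int
    width: int
    height: int


def split_frame(frame: Image) -> Tuple[Image, Image]:
    """Divide one image frame into a left and a right image frame."""
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:2 * half] for row in frame]
    return left, right


def layout(display_w: int, display_h: int, in_headset: bool) -> List[Viewport]:
    """Return the display regions to draw into.

    Normal mode (first format): one full-screen viewport.
    Headset / close-up mode (second format): two half-width viewports,
    so each eye's image has a lower horizontal resolution.
    """
    if not in_headset:
        return [Viewport(0, 0, display_w, display_h)]
    half = display_w // 2
    return [Viewport(0, 0, half, display_h), Viewport(half, 0, half, display_h)]


# Usage: once the headset is detected, the left frame is directed to the
# first portion of the display and the right frame to the second.
frame = [[1, 2, 3, 4], [5, 6, 7, 8]]
left, right = split_frame(frame)
for image, viewport in zip((left, right), layout(1334, 750, in_headset=True)):
    print(viewport, image)
```

Keeping mode selection (`layout`) separate from frame division (`split_frame`) mirrors the claims, which treat detecting the head-mounted device and dividing the image frame as distinct steps.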

2. An Augmented Reality Display for Mobile Devices

As for AR patent application 20160240119, Apple’s patent notes that “These overlays whether in handheld or other electronic devices may provide an ‘augmented reality’ interface in which the overlays virtually interact with real-world objects. For example, the overlays may be transmitted onto a display screen that overlays a museum exhibit, such as a painting. The overlay may include information relating to the painting that may be useful or interesting to viewers of the exhibit.” This is exactly what Lenovo promoted when it introduced its first Google Project Tango smartphone, which will be coming to market in September. Apple’s filing further noted that “Additionally, overlays may be utilized on displays in front of, for example, landmarks, historic sites, or other scenic locations. The overlays may again provide information relating to real-world objects as they are being viewed by a user. These overlays may additionally be utilized on, for example, vehicles utilized by tourists.

“For example, a tour bus may include one or more displays as windows for users. These displays may present overlays that impart information about locations viewable from the bus.” Various additional augmented reality applications that may be provided via a mobile device are disclosed in U.S. application Ser. No. 12/652,725, filed Jan. 5, 2010, and entitled “SYNCHRONIZED, INTERACTIVE AUGMENTED REALITY DISPLAYS FOR MULTIFUNCTION DEVICES,” which was incorporated into Apple’s original filing.

3. Augmented-reality shopping on your phone? Patent hints at Amazon’s ambitions

It’s no secret that Amazon is intrigued by the potential applications of augmented reality for e-commerce, and one of those applications is explored in a newly published patent.

Imagine that you’re shopping online for a classy watch or bracelet, and you want to get a sense for how it’ll look around your wrist. Just point your smartphone camera at your hand, and an augmented-reality app will show you the item superimposed on the camera video.

But what about the bling? The patent published today, based on an application filed back in 2013, focuses on how to add the sparkle to the virtual image of the bracelet.

The technique calls for using a three-dimensional sensor, such as Microsoft’s Kinect device, to generate a cloud of data points for the real-life object (your hand, for example). The app would keep track of your hand’s position and orientation with respect to the phone, and calculate how the virtual item would reflect light in different ways as you move your hand around.
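The patent application does not say which shading model the app would use. As an illustrative assumption, the sketch below uses the common Blinn-Phong specular term to show why the sparkle changes as the tracked hand rotates: the highlight is brightest when a surface normal bisects the directions to the light and to the viewer. `rotate_x` stands in for the hand orientation recovered from the point cloud; all names here are hypothetical.

```python
import math
from typing import Tuple

Vec = Tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def rotate_x(v: Vec, angle_rad: float) -> Vec:
    """Rotate v about the x axis; stands in for the tracked hand orientation."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return (v[0], c * v[1] - s * v[2], s * v[1] + c * v[2])


def specular(normal: Vec, to_light: Vec, to_viewer: Vec, shininess: float = 64.0) -> float:
    """Blinn-Phong specular intensity in [0, 1] for one surface point.

    The half-vector bisects the light and view directions; the closer the
    surface normal is to it, the stronger the highlight. A high shininess
    exponent gives small, sharp glints like polished metal. Assumes the
    light and view directions are not exactly opposite (zero half-vector).
    """
    n = normalize(normal)
    l, v = normalize(to_light), normalize(to_viewer)
    h = normalize((l[0] + v[0], l[1] + v[1], l[2] + v[2]))
    return max(dot(n, h), 0.0) ** shininess


# Usage: light and camera both straight ahead (+z). A bracelet facet facing
# the camera glints; tilting the hand by 30 degrees makes the glint fade.
light, camera, facet = (0.0, 0.0, 1.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0)
print(specular(facet, light, camera))
print(specular(rotate_x(facet, math.radians(30)), light, camera))
```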

“The items may include jewelry, eyeglasses, watches, home furnishings, and so forth,” the inventors say in the application. “Users who wish to purchase these physical items may find that the experience of purchasing is enhanced by more realistic presentations of the physical items on devices.”

The data on lighting sources could be collected using the front- and rear-facing cameras on a smartphone. The app would process those readings to tweak the computer-generated view of the sale item, superimposed on the real-world view.
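As a rough, hypothetical illustration of turning camera readings into lighting data, the sketch below estimates the overall brightness of a frame and the position of its brightest spot. The weights are the Rec. 709 luma coefficients applied directly to the channel values; the function names, and the idea of running the same estimate on front and rear camera frames, are assumptions rather than details from the patent.

```python
from typing import List, Tuple

RGB = Tuple[int, int, int]
Frame = List[List[RGB]]  # row-major grid of (r, g, b) values, 0-255


def luma(pixel: RGB) -> float:
    """Approximate brightness using Rec. 709 weights on the raw channel values."""
    r, g, b = pixel
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def estimate_lighting(frame: Frame) -> Tuple[float, Tuple[int, int]]:
    """Return (mean brightness, (row, col) of the brightest pixel).

    The mean is a crude ambient-light level; the brightest pixel is a crude
    hint of where a dominant light source appears in the camera's view.
    Assumes a non-empty frame.
    """
    total, count = 0.0, 0
    brightest, brightest_pos = -1.0, (0, 0)
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            value = luma(pixel)
            total += value
            count += 1
            if value > brightest:
                brightest, brightest_pos = value, (y, x)
    return total / count, brightest_pos


# Usage: a tiny 2x2 "rear camera" frame with one white pixel at top right.
rear = [[(0, 0, 0), (255, 255, 255)], [(0, 0, 0), (0, 0, 0)]]
ambient, light_pos = estimate_lighting(rear)
print(ambient, light_pos)
```

A real app would work on full-resolution frames and map the bright spot’s pixel position to a 3D direction using the camera’s intrinsics, but the principle of reading light levels from ordinary camera frames is the same.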

Did you get all that? Here are a couple of illustrations from the patent application to show how it could work.

Illustration: The augmented-reality app would gather information about angles of reflection to create the correct lighting conditions for a virtual view. (Amazon illustration via USPTO)

Amazon doesn’t typically comment on its patents until it releases a product, and there’s no guarantee that the augmented-reality bling will become one of the Seattle-based online retailer’s sales tools.

However, when you consider the fact that Amazon Web Services has just set up a mixed-reality tech team, and then look at the list of the company’s past patents – including a concept for turning your living room into an AR showroom – you have to conclude that Amazon’s interest in augmented reality is no illusion.

4. Augmented reality device with advanced object recognition, POI labeling

As published by the United States Patent and Trademark Office, U.S. Patent No. 9,560,273 lays out the hardware framework of an augmented reality device with enhanced computer vision capabilities, while U.S. Patent No. 9,558,581 details a particular method of overlaying virtual information atop a given environment. Together, the hardware and software solutions could provide a glimpse of Apple’s AR aspirations.

The ’273 patent, for a “wearable information system having at least one camera,” imagines a device with one or more cameras, a screen, a user interface, and an internal component dedicated to computer vision. While head-mounted displays are mentioned as the ideal platform for the AR invention, the filing also suggests that smartphones can serve as a suitable stand-in. Apple’s invention goes further than simple device control, however, as it details a power-efficient method of monitoring a user’s environment and providing information about objects of interest.

The document dives deep into the initialization of optical tracking, that is, the initial determination of the camera’s position and orientation. An integral facet of AR, initialization is considered one of the technology’s most difficult hurdles to overcome. Apple notes that the problem can be divided into three main building blocks: feature detection, feature description, and feature matching. Instead of relying on existing visual computing methods, Apple proposes a new optimized technique that uses dedicated hardware and prelearned data.
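The matching step of those three building blocks can be illustrated with a generic, textbook approach: brute-force nearest-neighbour matching of binary descriptors by Hamming distance, with a ratio test that rejects ambiguous matches. This is not Apple’s optimized hardware technique; descriptors are assumed to be already detected and encoded as integers, and `match_features` is a hypothetical name.

```python
from typing import List, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def match_features(query: List[int], train: List[int], ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Brute-force matching with a ratio test.

    Returns (query_index, train_index) pairs whose best match is clearly
    better than the second-best one; ties and near-ties are discarded,
    which filters out repetitive or ambiguous features.
    """
    matches = []
    if not train:
        return matches
    for qi, q in enumerate(query):
        dists = sorted((hamming(q, t), ti) for ti, t in enumerate(train))
        best_d, best_i = dists[0]
        if len(dists) == 1 or best_d < ratio * dists[1][0]:
            matches.append((qi, best_i))
    return matches


# Usage: descriptors from the live camera frame (query) matched against
# descriptors of a known, prelearned target (train).
live = [0b11110000, 0b10101010]
target = [0b11110001, 0b00001111, 0b10101010]
print(match_features(live, target))
```

Once enough reliable matches are found, the camera’s initial position and orientation can be estimated from them, which is the initialization step the patent targets.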

Apple’s ’581 patent, titled “Method for representing virtual information in a real environment,” also originated in Metaio’s labs and reveals a method of labeling points of interest (POIs) in an AR environment. More specifically, the IP takes occlusion perception into account when overlaying virtual information onto a real-world object. Applying 2D and 3D models allows such systems to account for the user’s viewpoint and distance from the POI, which in turn aids in the proper visualization of digital content. Importantly, the process can calculate a ray between the viewing point and the virtual information box or asset. These rays are limited by boundaries associated with a POI’s “outside” and “inside” walls.
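A simplified 2D version of such a ray test might look like the following. It assumes a building footprint given as a polygon and a viewer standing outside it, and counts how many walls the ray from the viewing point to the POI crosses: none means the POI is in plain view, an odd count means it lies inside the building, and an even nonzero count means the building blocks it. This illustrates the idea only, not the patent’s actual procedure; all names are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _cross(o: Point, a: Point, b: Point) -> float:
    """z-component of (a - o) x (b - o); its sign tells which side b is on."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def segments_intersect(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """Proper intersection of segments p1-p2 and q1-q2 (touching ends ignored)."""
    d1, d2 = _cross(q1, q2, p1), _cross(q1, q2, p2)
    d3, d4 = _cross(p1, p2, q1), _cross(p1, p2, q2)
    return d1 * d2 < 0 and d3 * d4 < 0


def walls_crossed(viewer: Point, poi: Point, footprint: List[Point]) -> int:
    """Count building walls (polygon edges) crossed by the viewer-to-POI ray."""
    n = len(footprint)
    return sum(
        segments_intersect(viewer, poi, footprint[i], footprint[(i + 1) % n])
        for i in range(n)
    )


def classify(viewer: Point, poi: Point, footprint: List[Point]) -> str:
    crossings = walls_crossed(viewer, poi, footprint)
    if crossings == 0:
        return "visible"
    if crossings % 2 == 1:
        return "inside building"  # one wall separates viewer and POI
    return "occluded"  # the ray passes through the building


# Usage: a 10 x 10 building, viewer standing to its left.
building = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
viewer = (-5.0, 5.0)
for poi in [(5.0, 5.0), (15.0, 5.0), (-5.0, 20.0)]:
    print(poi, classify(viewer, poi, building))
```

An AR browser could then draw “inside” POIs with a different style, or hide occluded labels, rather than pasting every label over the camera view.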

Again, Apple’s patent relies on a two-camera system for creating a depth map of the user’s immediate environment. In the ’581 patent, this depth map is used to generate the geometric models onto which virtual data is superimposed.